Research group digest

My main efforts have gone to getting the SearchLogger server side working. It never worked on Windows but now it works perfectly on Linux. I also reverted from XAMPP back to traditional PHP 5 + Apache 2 + MySQL 5. The setup script worked just fine and no problems persists on the server side.

Client side, the logger itself, is another story. I managed to successfully compile both the single-session and multi-session versions (./compile.sh searchLogger) but there by luck ended. Firefox will not accept the .xpi because something is missing. Has anyone had anything similar?

This post was rather technical and configuration-related. I've also studied Drupal and Wordpress capabilities for the task ahead but this takes a few more hours to complete.

During our research we have recently come across an interesting blog with the title “The Case for a Cloud Computing Price War”, where the author Daniel Berninger argues that Amazon has kept prices for its EC2 offering stable over years, without handing over price performance improvements to its customers. The article further on argues that [...]

Recently a researcher we are working with gave me an VirtualBox image to deploy on EC2 with the fledgling “Desktop-to-Cloud” tool. Discovering that it was 64bit, I had some additional work on my hands. Up until now the migration tool had assumed all source images are 32bit. No problem, I thought, there should only be [...]

- SearchLogger server installation and 'stealing' user authentication for the repository from some CMS

Hi again!

I have been trying to get the searchlogger server working properly on Windows. So far, no luck. I have tested running the setup file on WAMPServer as well as on EasyPHP bundles and it looks ugly. Will try on Linux as well (now that I have Ubuntu 10.10 finally installed ;-)).

Elsewhere, I have also been trying Drupal 7.0, Wordpress 3.1, Joomla 1.6 and Spip 2.1.8 as EasyPHP modules. Drupal and Wordpress can certainly provide authentication capabilities for the website. Not sure about Joomla ad Spip at this stage yet.

Some interesting findings: 1) A guy integrates his ZenPhoto with Wordpress in a way that if you are logged into WordPress, you are also logged into ZenPhoto. See how! This is rather out-of-date though. 2) Drupal authentication is not clear to me yet even though it exists. I did not stumble upon good tutorials on this but I will keep searching.

Hey! I have to say, that nothing will be done on the project this week, since I go to St. Petersburg for a weekend. Sorry :)

Database replication is dealing with management of data copies on different nodes. The main challenge of database replication is to keep copies consistent and to provide a correct execution on each node of the system. Database replication can be used for fault-tolerance and high availability of the system, therefore primary purpose of database replication is to increase the performance and improve scalability of database engines.

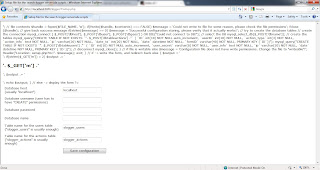

In our project (at least, at first) a simple master-slave approach will be implemented. Changes made to the master server will be copied to the slave. To achive that, the following steps have to be made:

- Some technical details: we use MySQL database here. CloudEco machine is used as a master database, host machine as a slave.

- First, we need to configure the network adapter for CloudEco machine. Since we gonna use host machine as a slave, we have to set it to Host-only and it's type to Paravirtualized network. I'm not saying that this is the only available option, you can use NAT adapter as well, but it may cause some trouble (like dynamic IP).

- Now, let's configure the master machine. Edit /etc/mysql/my.cnf file:

- Comment out the following lines (to allow connection from other machines):

> >>> >>_#skip-networking_>>> >>>

> >>> >>_#bind-address = 127.0.0.1_>>> >>>

- Add following lines:

> >>> >>_log-bin = /var/log/mysql/mysql-bin.log_>>> >>>> >>> >>_ binlog-do-db=tpcw-db_>>> >>>> >>> >>_ server-id=1_>>> >>>

- Then we restart MySQL service_ /etc/init.d/mysql restart_

- Login to mysql shell to create user with replication privileges

> >>> >>_GRANT REPLICATION SLAVE ON *.* TO root@192.168.56.1 IDENTIFIED BY password; FLUSH PRIVILEGES; _>>> >>>

- Moving on to configuring the slave. Add the following lines to_ /etc/mysql/my.cnf_:

> >>> >>>>> >>>_server-id=2 master-host=192.168.56.101 master-user=root master-password=password master-connect-retry=60 replicate-do-db=tpcw-db_>>>> >>>>

- Restart the service /etc/init.d/mysql restart

- Login to the mysql shell and run follwoing command:_ _

> >_CHANGE MASTER TO MASTER_HOST='192.168.56.101', MASTER_USER='root', MASTER_PASSWORD='password', MASTER_LOG_FILE='mysql-bin.006', MASTER_LOG_POS=183; _>> >>

- To test, if everything is configured correctly, you can try to access master databse from the slave machine by using mysql -h192.168.56.101 -u root -p command.

- Now, we need to load the initial database to the slave. There are two ways to do that. First is to create a dump file (mysqldump -u root -opt tpcw-db > tpcw-db.sql), second is to log on to the MySQL shell and run LOAD DATA FROM MASTER command. In the second option, an error may occur saying "Access denied; you need the RELOAD privilege for this operation". This means that you did not grant the privileges to the slave user.

- Now start the slave:_ _

> >>> >>>>> >>>_START SLAVE;_>>>> >>>>

- Now it's time to check if replication is working. Make some changes to the master database and check if slave database has changed. If nothing is going on, make sure that you have started the slave.

And here comes the bonus!

The following are a few lessons learned about migrating a VM to EC2 in the past 2 weeks. These notes are particular to dealing with an Ubuntu VirtualBox image. Hopefully they will provide some tips/guidance to others who are undertaking the same task. 1. Transforming a VM image on OSX can be very cumbersome / [...]

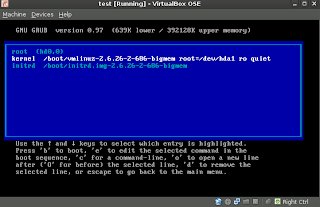

With the plan ready somehow and new laptop provided, it is time to start some real work. Operation system chosen, network connection shared, VirtualBox installed, CloudEco image converted to vdi format. But, wait? Nothing is working! Virtual machine started to boot and then left me with the message "Loading, please wait" forever. After some days of thinking what is wrong and some unsuccessful tries, it was discovered that there was error in the GRUB.

| [](http://4.bp.blogspot.com/-T-KIfe2JTbc/TV_aPp0--aI/AAAAAAAABes/Lzrk6dFsUGQ/s1600/grub.png) |

| Boot options in GRUB |

System tried to boot /dev/hda1 as a root, but failed to find it. Why it was /dev/hda1 in the first place, I personaly don't remeber, though my supervisor had some explanation for this. It is easily solved by the following actions:

- Change_ /dev/hda1_ to /dev/sda1(you can also remove quiet option to see the log)

- Boot the machine

- Delete /boot/grub/menu.lst

- Run update-grub command to regenerate menu.lst file

So, now virtual machine is running and it is time to start with database replication :)

In recent years large investments have been made to build data centers, or server farms, purpose-built facilities providing storage and computing services within and across organizational boundaries. A typical server farm may contain thousands of servers, which require large amounts of power to operate and to keep cool, not to mention the hidden costs associated [...]

Cloud providers, like Amazon, offer their data centers’ computational and storage capacities for lease to paying customers. High electricity consumption, associated with running a data center, not only reflects on its carbon footprint, but also increases the costs of running the data center itself. In “Maximizing Cloud Providers Revenues via Energy Aware Allocation Policies” we address [...]

Scientists and researchers may develop number-crunching programs on a personal machine, but lack the technical means to scale the architecture to deal with meaningful datasets efficiently. I would like to introduce the “Desktop to Cloud”, a project recently begun that aims to address roadblock. Our goal is to provide tools that push and expand an [...]

Hello and welcome to my blog! I am Peeter Jürviste and over the coming weeks I will be posting about the progress made and problems encountered in developing a search task repository. It is a case study in collaborative search and an interesting challenge for me and hopefully the working solution will make your complex search tasks much easier and less time consuming.

Please also visit the Distributed Systems Group site from University of Tartu.

My next post will be about tasks to be solved and technologies used in the project.

Bye!

Starting this blog, I feel a bit like Carrie Bradshaw, in some way we write about the same topic :)

This blog is created with a purpose to describe progress on my master thesis and, by chance, my project for seminar on distributed systems. Project's current name is "Cost prediction of moving three-tier applications to the clouds". The goal is to predict how many resources are used by specific application and what type of machine is needed to run it.

Current plan on how to carry out the project is: Step 1. Run load tests on CloudEco project's machine, with and without database replication. Compare the results. Step 2. Install osCommerce online shop applications, run tests similar to the ones from the step 1. Compare the results. Step 3. Move both images (from step 1 and step 2 accordingly) to the cloud, run tests there, compare the results :) Step 4. Come up with some formula Step 5. PROFIT!

Since I'm using new computer and I was careless enough to ignore the backup possibility, this week I'll be restoring the state I had last semester. The details will follow in the next post.

Stay tuned and have a nice day!

We have recently written a scientific article on “Towards a Model for Cloud Computing Cost Estimation with Reserved Instances”. Cloud computing has been touted as a lower-cost alternative to in-house IT infrastructure recently. However, case studies and anecdotal evidence suggest that it is not always cheaper to use cloud computing resources in lieu of in-house [...]

We have recently taken a look at how easy it would be to shop at Amazon cloud computing offerings. Let’s assume the following scenario. We are running an e-commerce site with all the IT in house. Now we are considering to migrate to the cloud and Amazon seems to be an interesting partner. We have [...]

Comments